Natural Language Processing — Event Extraction

Extracting events from news articles

The amount of text generated every day is mind-blowing. Millions of data feeds are published in the form of news articles, blogs, messages, manuscripts, and countless more, and the ability to automatically organize and handle them is becoming indispensable.

With improvements in neural network algorithms, significant increases in computing power, and easy access to comprehensive frameworks, Natural Language Processing has never seemed so appealing. One of its common applications is Event Extraction: the process of gathering knowledge about incidents reported in texts, automatically identifying what happened and when it happened.

For example:

2018/10: President Donald Trump’s government banned countries from importing Iranian oil, with exemptions for seven countries.

2019/04: US Secretary of State Mike Pompeo announced that his country would grant no more exemptions after the deadline.

2019/05: The United States ended the exemptions that allowed countries to import oil from Iran without suffering US sanctions.

This ability to contextualize information allows us to connect events distributed over time, assimilate their effects, and see how a set of episodes unfolds. Those are valuable insights that drive organizations like EventRegistry and Primer.AI, which provide this technology to different market sectors.

In this article, we’re going to build a simple Event Extraction script that takes in news feeds and outputs the events.

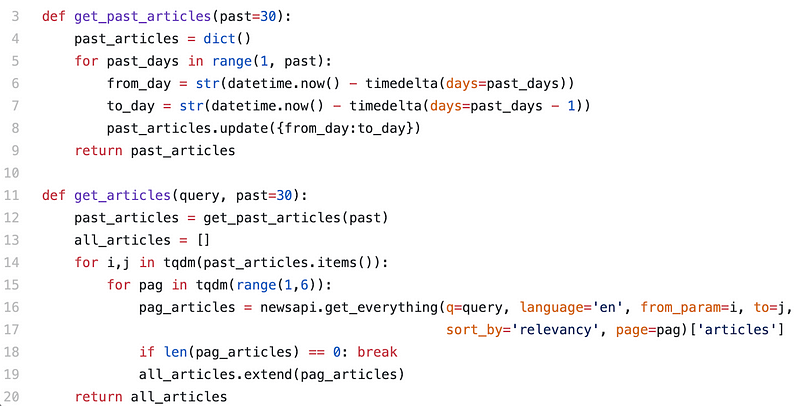

Get the data

The first step is to gather the data. It could be any type of text content as long as it can be represented in a timeline. Here I chose to use newsapi, a simple news source API with a free developer plan of up to 500 requests a day. Following are the functions built to handle the requests:

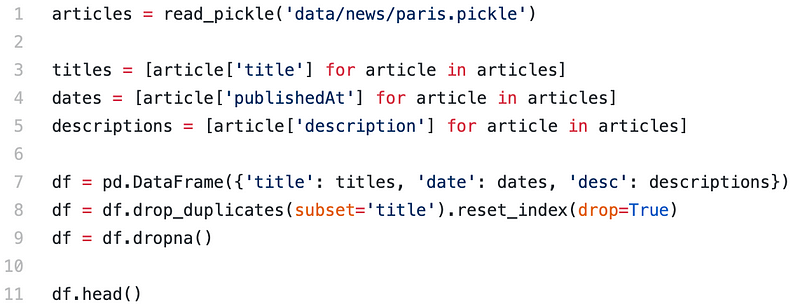

That last function returns a list of approximately 2,000 articles for a given query. Our purpose is just to extract events, so, to simplify the process, we’re keeping only the titles (in theory, a title should carry the core message of the news).

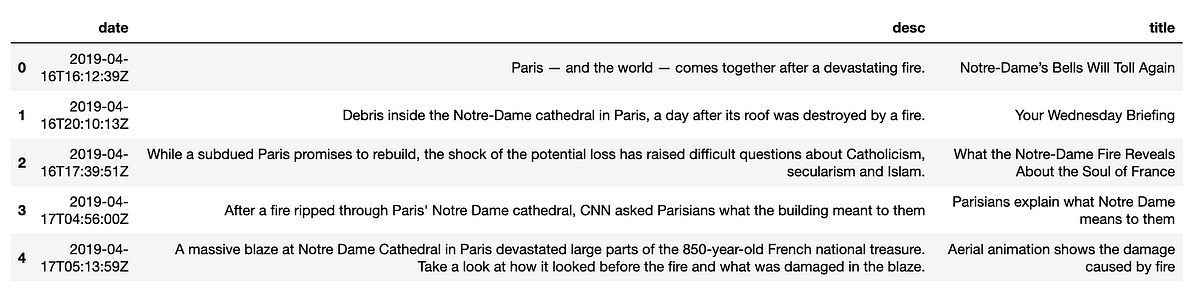

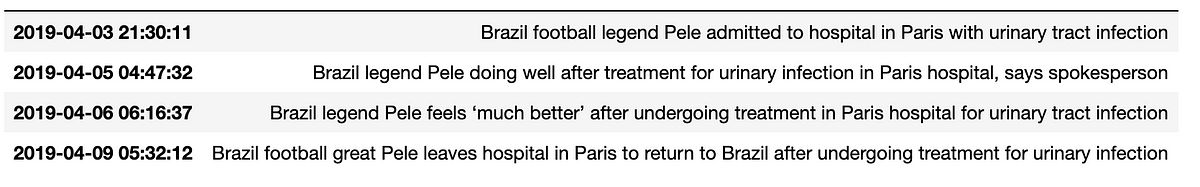

That leaves us with a data frame like the one below, including dates, descriptions, and titles.
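As a rough sketch of this step, the snippet below builds a request URL and reduces a response to rows of date, title, and description. It assumes NewsAPI's `/v2/everything` endpoint with its `q`, `pageSize`, `page`, and `apiKey` parameters, and a response whose `articles` carry `publishedAt`, `title`, and `description`. The helper names and the sample payload (including its dates) are hypothetical, and no live request is made.

```python
from datetime import date
from urllib.parse import urlencode

# Assumption: NewsAPI's "everything" endpoint and its documented query parameters.
NEWSAPI_URL = "https://newsapi.org/v2/everything"


def build_url(query, api_key, page=1, page_size=100):
    """Build the request URL for one page of results (hypothetical helper)."""
    params = {"q": query, "pageSize": page_size, "page": page, "apiKey": api_key}
    return f"{NEWSAPI_URL}?{urlencode(params)}"


def to_rows(payload):
    """Keep only the date, title, and description of each article."""
    rows = []
    for article in payload.get("articles", []):
        title = article.get("title")
        published = article.get("publishedAt")
        if not title or not published:
            continue  # skip entries missing a title or a timestamp
        rows.append({
            # "2019-04-16T08:30:00Z" -> date(2019, 4, 16)
            "date": date.fromisoformat(published[:10]),
            "title": title,
            "description": article.get("description") or "",
        })
    return rows


# Made-up payload in the assumed response shape, used instead of a live call.
sample = {
    "status": "ok",
    "articles": [
        {"publishedAt": "2019-04-16T08:30:00Z",
         "title": "Paris comes together after a devastating fire",
         "description": None},
        {"publishedAt": "2019-04-03T12:00:00Z",
         "title": "Brazil football legend Pele admitted to hospital in Paris",
         "description": "Example description"},
        {"publishedAt": "2019-04-05T09:00:00Z", "title": None},
    ],
}

rows = to_rows(sample)
url = build_url("Paris fire", "KEY")
```

Passing `rows` to `pandas.DataFrame` would give a frame with `date`, `title`, and `description` columns.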

Give meaning to sentences

With our titles ready, we need to represent them in a machine-readable way. Notice that we’re skipping a whole stage of pre-processing here, since that’s not the purpose of the article. But if you are starting with NLP, make sure to include those basic pre-processing steps before applying the models (here is a nice tutorial).

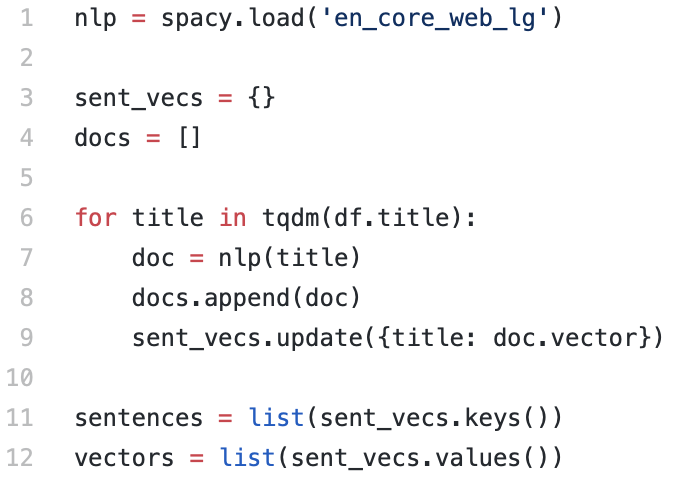

To give meaning to independent words and, therefore, whole sentences, let’s use spaCy’s pre-trained word embeddings model (en_core_web_lg). Alternatively, you could use any pre-trained word representation model (Word2Vec, FastText, GloVe…).

By default, spaCy computes a sentence’s vector as the average of its token vectors. It’s a simplistic approach that doesn’t take the order of words into account when encoding the sentence. For a more sophisticated strategy, take a look at models like Sent2Vec and SkipThoughts. This article about unsupervised summarization gives an excellent introduction to SkipThoughts.
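To make the averaging concrete, here is a small sketch with made-up 3-dimensional vectors instead of the real model. The `toy_vectors` table and `sentence_vector` helper are illustrative assumptions. In spaCy itself the averaged vector is exposed as `Doc.vector`.

```python
import numpy as np

# Toy 3-dimensional embeddings with made-up values;
# en_core_web_lg vectors have 300 dimensions.
toy_vectors = {
    "dog": np.array([1.0, 0.0, 0.0]),
    "bites": np.array([0.0, 1.0, 0.0]),
    "man": np.array([0.0, 0.0, 1.0]),
}


def sentence_vector(sentence):
    """Average the vectors of the tokens in a whitespace-tokenized sentence."""
    tokens = sentence.lower().split()
    return np.mean([toy_vectors[token] for token in tokens], axis=0)


a = sentence_vector("dog bites man")
b = sentence_vector("man bites dog")

# Same words in a different order give the same vector.
print(np.allclose(a, b))  # True
```

Both sentences end up with the same representation, which is exactly the limitation that order-aware sentence encoders try to address.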

For now, let’s keep it simple:

So, each title will have a respective 300-dimensional array, as follows:

Cluster those vectors

Even though we are filtering our articles by a search term, different topics can come up from the same query. For instance, searching for “Paris” could result in:

Paris comes together after a devastating fire

Or:

Brazil football legend Pele admitted to hospital in Paris

To separate articles by topic, we’ll use a clustering algorithm.

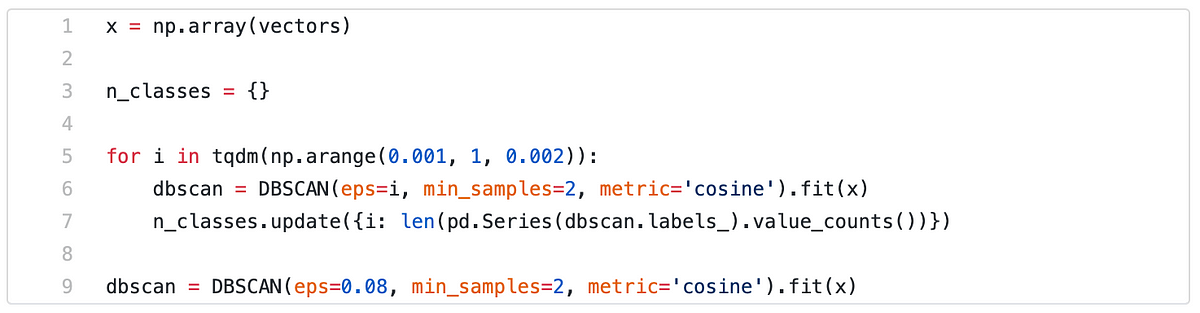

In this particular case, I wanted to try the DBSCAN algorithm because it doesn’t require specifying the number of clusters in advance. Instead, it determines by itself how many clusters to create, and their sizes.

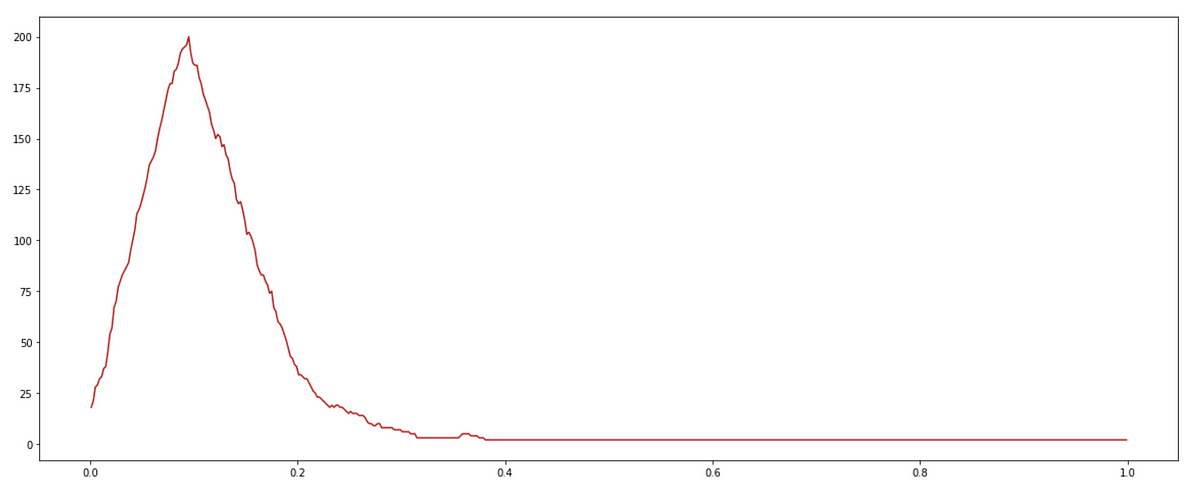

The epsilon parameter (eps) sets the maximum distance between two samples for them to be considered part of the same neighborhood. If eps is too big, fewer clusters are formed. If it’s too small, most points are classified as not belonging to any cluster, which also results in few clusters. The following chart shows the number of clusters by epsilon:

Tuning the eps value might be one of the most delicate steps, because the outcome varies depending on how similar you want sentences to be before grouping them. The right value comes from experimentation: it should preserve the similarities between sentences without splitting close sentences into different groups.
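The effect of eps is easy to reproduce on toy data. The sketch below uses scikit-learn's `DBSCAN` on three tight groups of 2-D points that stand in for title vectors. The points, the Euclidean metric (scikit-learn's default), and `min_samples=2` are assumptions made for illustration, not the settings used on the real titles.

```python
import numpy as np
from sklearn.cluster import DBSCAN

# Three tight, well-separated groups of 2-D points standing in for title vectors.
offsets = np.array([[0.0, 0.0], [0.0, 0.1], [0.1, 0.0], [0.1, 0.1]])
centers = np.array([[0.0, 0.0], [5.0, 5.0], [10.0, 0.0]])
points = np.vstack([center + offsets for center in centers])


def count_clusters(eps, min_samples=2):
    """Fit DBSCAN and count the clusters, ignoring the noise label -1."""
    labels = DBSCAN(eps=eps, min_samples=min_samples).fit_predict(points)
    return len(set(labels.tolist()) - {-1})


# Too small, reasonable, and too large values of eps.
counts = {eps: count_clusters(eps) for eps in (0.01, 0.5, 20.0)}
print(counts)  # {0.01: 0, 0.5: 3, 20.0: 1}
```

With the smallest eps every point is noise, with the largest everything merges into one cluster, and only the value in between recovers the three groups.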

In general, since we want to end up with very similar sentences in the same cluster, the target should be a value that returns a higher number of clusters. For that reason, I chose a number between 0.08 and 0.12. Check out the scikit-learn documentation to find out more about this and other parameters.

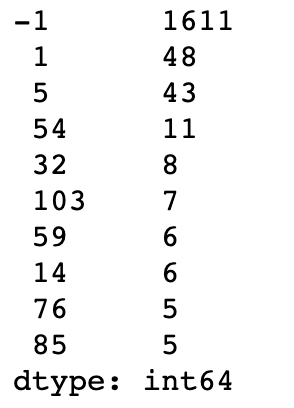

Below are some clusters and their sizes:

The -1 class stands for sentences with no cluster, while the others are cluster indexes. The biggest clusters should represent the most discussed topics.
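Counting cluster sizes from the label array takes only a few lines. This is a minimal sketch in which the `labels` list is a made-up example of DBSCAN output, not the real result.

```python
from collections import Counter

# Hypothetical DBSCAN output: one label per title, -1 meaning "no cluster".
labels = [-1, 0, 0, 1, -1, 0, 2, 1, 0, -1]

sizes = Counter(labels)
noise = sizes.pop(-1, 0)             # titles left without a cluster
largest_first = sizes.most_common()  # (label, size) pairs, biggest cluster first

print(noise)          # 3
print(largest_first)  # [(0, 4), (1, 2), (2, 1)]
```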

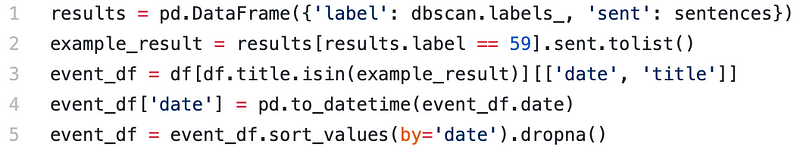

Let’s check out one of the clusters:

Convert into events

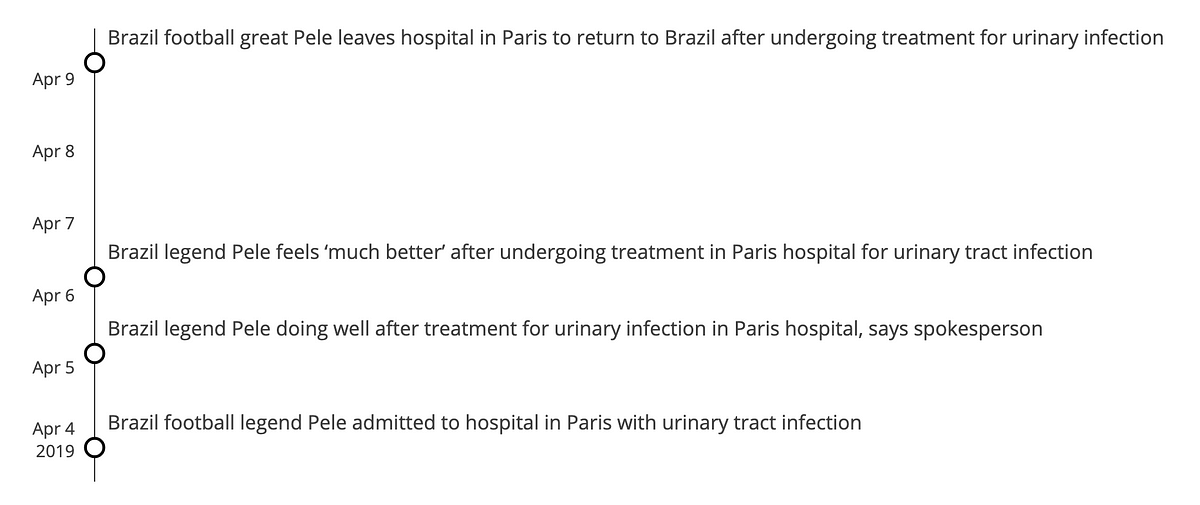

The next step is to arrange those sentences time-wise and filter them by relevance. Here, I chose to display one article per day so that the timeline is clean and consistent.
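One possible way to arrange a cluster time-wise is to bucket its titles by publication day and sort the days. The sketch below assumes each article is a `(date, title)` pair. The sample data and the `group_by_day` helper are hypothetical.

```python
from collections import defaultdict
from datetime import date

# Hypothetical (date, title) pairs taken from a single cluster.
articles = [
    (date(2019, 5, 2), "Title C"),
    (date(2019, 4, 22), "Title A"),
    (date(2019, 5, 2), "Title D"),
    (date(2019, 4, 23), "Title B"),
]


def group_by_day(items):
    """Bucket titles by day and return the days in chronological order."""
    by_day = defaultdict(list)
    for day, title in items:
        by_day[day].append(title)
    return dict(sorted(by_day.items()))


timeline = group_by_day(articles)
for day, titles in timeline.items():
    print(day.isoformat(), len(titles))
```

Each day's bucket is then reduced to a single representative title.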

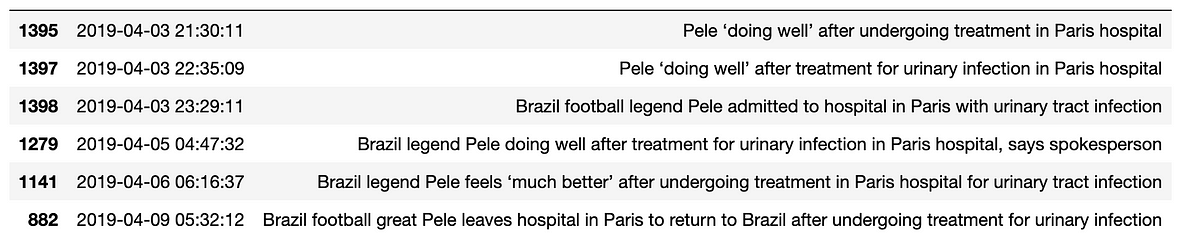

Since there are many sentences about the same topic every day, we need a criterion to pick one among them. It should be the one that best represents the event itself, i.e. what those titles try to convey.

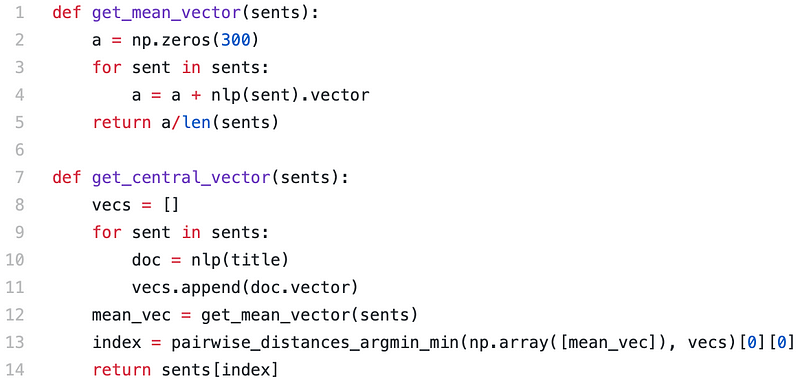

To achieve that we can cluster daily titles and, for each cluster, choose the one closest to the cluster center. Here are the functions to find the central vector given a list of sentences:
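A minimal sketch of that idea, not necessarily the original implementation: treat the mean of a group's sentence vectors as its center and pick the title with the highest cosine similarity to it. The function names and the toy 2-D vectors are assumptions.

```python
import numpy as np


def central_vector(vectors):
    """Mean of the sentence vectors, used here as the cluster center."""
    return np.mean(vectors, axis=0)


def closest_to_center(titles, vectors):
    """Return the title whose vector is most cosine-similar to the center.

    Assumes every vector, and the center itself, is non-zero.
    """
    vectors = np.asarray(vectors, dtype=float)
    center = central_vector(vectors)
    norms = np.linalg.norm(vectors, axis=1) * np.linalg.norm(center)
    similarities = (vectors @ center) / norms
    return titles[int(np.argmax(similarities))]


# Toy 2-D vectors; real title vectors would have 300 dimensions.
titles = ["Title A", "Title B", "Title C"]
vectors = [[1.0, 0.0], [1.0, 1.0], [0.0, 1.0]]

best = closest_to_center(titles, vectors)
print(best)  # Title B
```

Here the center is `[2/3, 2/3]`, so the title whose vector points in the same direction wins.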

Finally, using Plotly, we can figure out a way to plot a handy timeline chart:

That’s it. Using 2,000 random articles, we made a script to extract and organize events. Just imagine how useful it might be to apply this technique to millions of articles every day. Take stock markets and the impact of daily news as an example, and we can start to see the value of Event Extraction.

Several components could be included to improve the results, like properly pre-processing the data, including POS tagging and NER, applying better sentence-to-vector models, and so on. But starting from this point, a desirable result can be reached very quickly.

Thank you for reading this post. This was an article focused on NLP and Event Extraction. If you want more about Data Science and Machine Learning, make sure to follow my profile and feel free to leave any ideas, comments or concerns.