Launch Week Day 3 - Voice Mode 🎙️

Today, we’re thrilled to introduce a cool new feature in Langflow: Voice Mode! 🎤

This new addition allows you to engage in natural, voice-based conversations with any of your Langflow flows. This feature is still under active development, and we're eager for you to try it out and share your insights.

Let’s dive in and see how to do it.

How It Works

First, open the Playground. You’ll find it in the top-right corner of the Langflow UI within any flow.

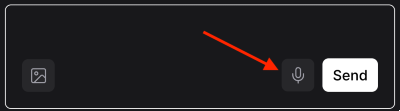

In the bottom-right corner of your chat, you’ll find a shiny new microphone icon. 🎙️

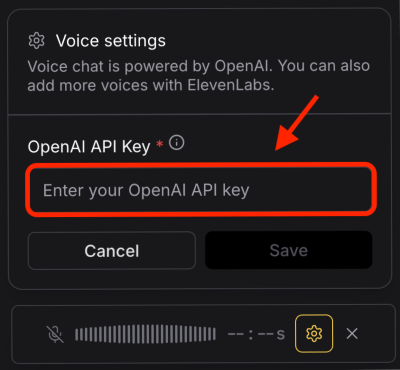

Click the microphone icon, and you’ll be prompted to enter your OpenAI API key. Enter your key and click Save.

(This feature is powered by OpenAI’s voice chat technology. Once your key is entered and saved, it becomes a global variable in Langflow, accessible from any component or flow.)

Setting Up Your Voice Chat

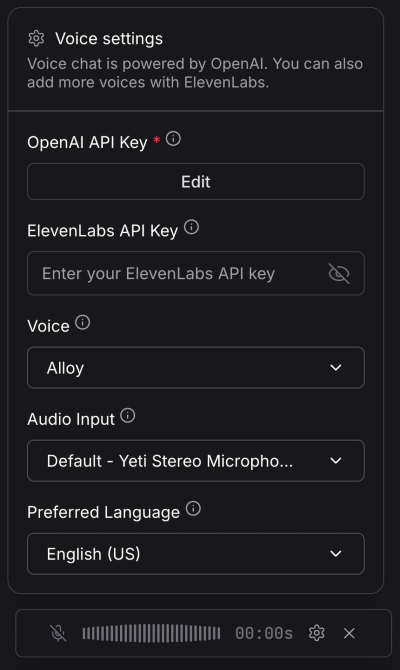

After activation, a configuration dialog appears.

Here, you can:

Choose a Voice

Voice chat comes with a default set of voices from OpenAI, but you can expand this list with ElevenLabs voices by entering an ElevenLabs API key. (As with your OpenAI key above, when you enter your ElevenLabs key and click away, it is stored as a global variable.)

Select Your Audio Input

Choose your preferred audio input. Note that a higher-quality microphone helps OpenAI’s voice chat understand you more accurately.

Pick Your Language

Select from English, Italian, French, Spanish, or German for enhanced speech recognition. You can also ask your agent to speak to you in various languages.

Start Chatting

Once configured, just start talking. Your voice agent can do anything your normal agent can do. However, in this initial version there are some considerations you should be aware of.

- When using voice mode in agentic flows, use very descriptive tool names and descriptions. This ensures the agent knows exactly what a tool is and what it can do from the tool itself, which matters because of the next point. (You should arguably be doing this anyway for the best agentic experience.)

- The voice agent does not use the agent instructions defined in your flow, which is one reason the previous point is important. If you have custom agent instructions, don’t expect the voice agent to follow them.

- Voice mode only keeps context within the current conversation session. If you exit the conversation and close the Playground, it won’t remember your conversation the next time you start chatting.

Use Voice Mode in Your Apps

It’s one thing to be able to chat with your agent from the Playground, but it’s even better to chat directly with your own apps. The new voice mode comes with a websocket endpoint at /api/v1/voice/ws/flow_tts that allows you to interface directly with any Langflow flow.

Below is an example using OpenAI’s realtime console websocket implementation.

On the left is Langflow, on the right is the console. You speak to the console, your voice is transcribed using OpenAI’s speech-to-text service, and the transcript is then processed by your Langflow flow through the flow_tts endpoint.

Here’s an example of a fully built websocket endpoint for access to Langflow:

ws://127.0.0.1:7860/api/v1/voice/ws/flow_tts/cc624f50-c695-4e25-bd83-e4497f5cbd1a/relay-session

(In the example above, cc624f50-c695-4e25-bd83-e4497f5cbd1a is the flow ID for our Langflow agent)
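The URL above follows the pattern of endpoint path, then flow ID, then session ID. As a minimal sketch of how an app might assemble it, the helper below builds the URL from its parts. The function name `buildVoiceUrl` and its option names are hypothetical, and the rule of using `ws:` locally and `wss:` elsewhere is an assumption that may not match your deployment.

```javascript
// Hypothetical helper: builds the voice mode websocket URL for a Langflow flow.
// Neither the function name nor its options come from Langflow itself.
function buildVoiceUrl({ host = '127.0.0.1', port = 7860, flowId, sessionId = 'relay-session' }) {
  if (!flowId) {
    throw new Error('flowId is required');
  }
  // Assumption: plain ws: for local development, wss: for any other host.
  const isLocal = host === 'localhost' || host === '127.0.0.1';
  const protocol = isLocal ? 'ws:' : 'wss:';
  const path = '/api/v1/voice/ws/flow_tts';
  // Encode the IDs so unexpected characters cannot break the URL path.
  return `${protocol}//${host}:${port}${path}/${encodeURIComponent(flowId)}/${encodeURIComponent(sessionId)}`;
}

const url = buildVoiceUrl({ flowId: 'cc624f50-c695-4e25-bd83-e4497f5cbd1a' });
console.log(url);
// ws://127.0.0.1:7860/api/v1/voice/ws/flow_tts/cc624f50-c695-4e25-bd83-e4497f5cbd1a/relay-session
```

Swap in your own flow ID, and pass a different `sessionId` if you want to keep conversations separate.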

OpenAI’s realtime console comes with a relay that you can modify to use your own server.

Here’s a snippet from a basic implementation configured to talk to a Langflow flow:

    // relay.js - WebSocket relay server for text-to-speech functionality
    import { WebSocketServer, WebSocket as NodeWebSocket } from 'ws';
    import { v4 as uuidv4 } from 'uuid';

    /**
     * RealtimeRelay - Manages WebSocket connections between a frontend client and Langflow backend
     * Primarily handles text-to-speech (TTS) functionality with message queueing and audio response handling
     */
    export class RealtimeRelay {
      constructor({
        flowId = 'cc624f50-c695-4e25-bd83-e4497f5cbd1a',
        sessionId = 'relay-session',
        host = '127.0.0.1',
        port = 7860,
        path = '/api/v1/voice/ws/flow_tts',
        pingInterval = 30000,
        maxQueueSize = 100
      } = {}) {
        // Connection details
        this.flowId = flowId;
        this.sessionId = sessionId;
        this.path = path;

        // Construct backend URL with protocol detection
        const protocol = host.includes('localhost') || host.includes('127.0.0.1') ? 'ws:' : 'wss:';
        this.backendUrl = `${protocol}//${host}:${port}${path}/${this.flowId}/${this.sessionId}`;
        console.log('🔌 Connecting to backend at:', this.backendUrl);

        // ... (rest of the constructor omitted in this snippet)
      }

      // ... (rest of the class omitted in this snippet)
    }

To use this example, clone OpenAI’s realtime console, follow its setup instructions, and replace the default relay.js with the one above, using one of your own flow IDs.
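The relay’s constructor accepts a `maxQueueSize` option and its class comment mentions message queueing, but the snippet stops before showing how the queue is used. One plausible reading is a bounded buffer that holds client messages until the backend connection is ready. The sketch below illustrates that idea only. The class `BoundedMessageQueue`, its method names, and the drop-oldest policy are all assumptions, not taken from the actual relay.

```javascript
// Hypothetical bounded queue: buffers messages until a connection is ready.
// This is an illustration of the idea, not the relay's real implementation.
class BoundedMessageQueue {
  constructor(maxQueueSize = 100) {
    this.maxQueueSize = maxQueueSize;
    this.items = [];
  }

  // Add a message. When the queue is full, drop the oldest message first
  // (assumed policy: recent input matters more than stale input).
  enqueue(message) {
    if (this.items.length >= this.maxQueueSize) {
      this.items.shift();
    }
    this.items.push(message);
  }

  // Pass every queued message to send() in arrival order, then empty the queue.
  // Returns the number of messages that were flushed.
  flush(send) {
    const pending = this.items;
    this.items = [];
    for (const message of pending) {
      send(message);
    }
    return pending.length;
  }
}

const queue = new BoundedMessageQueue(2);
queue.enqueue('a');
queue.enqueue('b');
queue.enqueue('c'); // queue is full, so 'a' is dropped

const sent = [];
const flushedCount = queue.flush((message) => sent.push(message));
console.log(sent, flushedCount); // sent is ['b', 'c'], flushedCount is 2
```

In a relay, `flush` would typically be called from the backend socket’s open handler, with `send` forwarding each message to Langflow.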

Conclusion

Ready to talk to your flows? Dive into Langflow’s new voice mode today and experience the future of interactive AI.

Now that you know how these new features work, here are some next steps:

- Join the Langflow Discord and let us know what you're building!

- Register for our Launch Week Livestream to hear more about the 1.3 release and a HUGE surprise we're announcing on Thursday.

- Check out our project on GitHub and give us a star if you like what you see!